GPU-accelerated local workstation for Data Science workflows

Well-designed data science workflows enhance productivity by optimizing cost and improving the user experience. Because development is iterative, the workflow cycles repeatedly through data exploration and model prototyping.

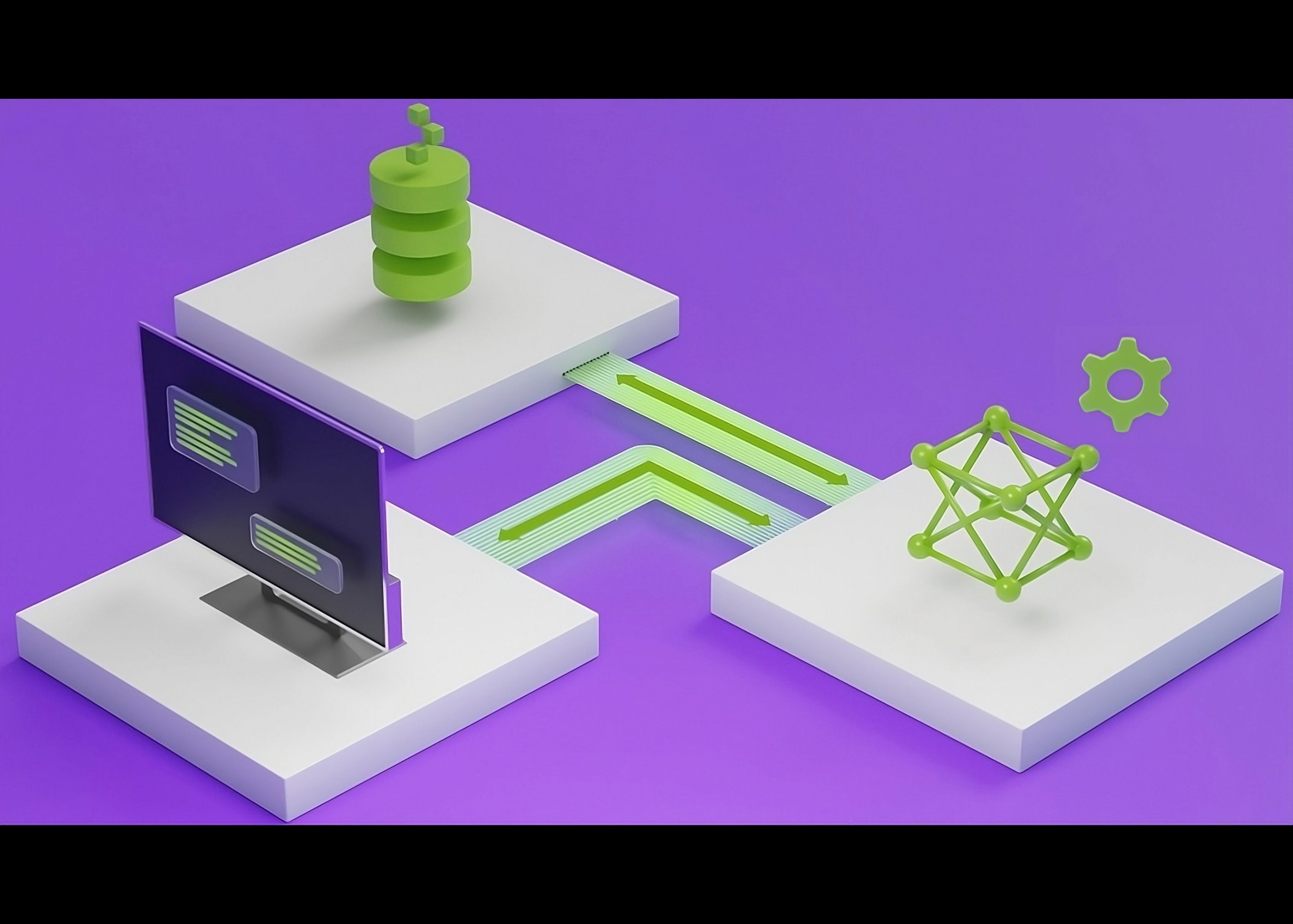

The data science workflow involves three different skill sets and personas:

- Data Engineer - Responsible for ingesting, storing, and cleansing the data so that it is ready for the data scientists.
- Data Scientist - Takes the cleaned, authentic, quality data and uses it to develop features, build model prototypes, and test models on real datasets, trying various algorithms to increase accuracy for the business use case. The result of this step is a model that is ready for the production environment.
- ML Engineer - Operationalizes data processing and deploys the models built from the data into the production system. Once in production, ML engineers constantly monitor model performance and accuracy.

It is found that around 90% of the time is spent in the experimentation, data exploration, and model prototyping stages, so these are the stages where productivity gains matter most. The data science workflow iterates over feature engineering, model selection, and hyperparameter tuning until it arrives at a model that meets all requirements and goals for the business use case.
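The iteration over model selection and hyperparameters can be sketched as a small loop. This is a minimal, CPU-only illustration under stated assumptions: the toy dataset, the k-nearest-neighbours model, and the helper names (`make_dataset`, `knn_predict`, `select_k`) are hypothetical and are not taken from any specific workflow described here.

```python
import random

def make_dataset(n, seed):
    """Toy 2-class data: label is 1 when a point lies above the line x + y = 1."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x, y = rng.random(), rng.random()
        data.append(((x, y), 1 if x + y > 1.0 else 0))
    return data

def knn_predict(train, point, k):
    """Majority vote among the k training points closest to `point`."""
    nearest = sorted(
        train,
        key=lambda item: (item[0][0] - point[0]) ** 2 + (item[0][1] - point[1]) ** 2,
    )[:k]
    votes = sum(label for _, label in nearest)
    return 1 if votes * 2 > k else 0

def accuracy(train, validation, k):
    """Fraction of validation points classified correctly for a given k."""
    correct = sum(knn_predict(train, p, k) == label for p, label in validation)
    return correct / len(validation)

def select_k(train, validation, candidates):
    """One pass of the loop: score every candidate k, keep the best one."""
    scores = {k: accuracy(train, validation, k) for k in candidates}
    best_k = max(scores, key=scores.get)
    return best_k, scores

train = make_dataset(200, seed=0)
validation = make_dataset(100, seed=1)
# Odd values of k avoid tied votes in a 2-class problem.
best_k, scores = select_k(train, validation, candidates=[1, 3, 5, 7, 9])
print("best k:", best_k, "validation accuracy:", scores[best_k])
```

In a real project each pass would also revisit the features and the model family, which is why the number of experiments grows quickly.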

Limitations of a traditional development setup on a CPU workstation or in the public cloud

- Higher cloud operational costs for training and experimentation
- Limited resource availability, waiting time, and limited compute capacity on a CPU workstation
- Security and vendor lock-in concerns for training data held in the cloud environment
- Infrastructure support and maintenance in the cloud add further operational costs

So, to improve productivity and reduce operational costs, data exploration and model prototyping can happen on a GPU-accelerated local workstation. The other two steps, full model training and model scoring with real data, can take place remotely as per business requirements.
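As a rough sketch of that split, the snippet below checks whether an NVIDIA GPU is visible on the workstation and routes each stage accordingly. It assumes the `nvidia-smi` command-line tool ships with the installed driver; the stage names and the `choose_target` rule are hypothetical and only illustrate the idea.

```python
import shutil
import subprocess

def list_local_gpus():
    """Return one 'name, memory' string per visible NVIDIA GPU, or [] if none."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver tools not installed, so treat as a CPU-only machine
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True, text=True, check=True, timeout=10,
        )
    except (subprocess.SubprocessError, OSError):
        return []  # tool present but unusable (for example, no device attached)
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]

def choose_target(stage, gpus):
    """Hypothetical routing rule: exploration and prototyping stay local when a
    GPU is available; every other stage runs remotely."""
    local_stages = {"data_exploration", "model_prototyping"}
    if stage in local_stages and gpus:
        return "local"
    return "remote"

gpus = list_local_gpus()
for stage in ["data_exploration", "model_prototyping", "full_training", "model_scoring"]:
    print(stage, "->", choose_target(stage, gpus))
```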

Advantages of a GPU-accelerated local workstation for data science workflows

- Many more model-prototyping experiments can be run
- A GPU-accelerated workstation can offer around 20x the processing power of a CPU for large training datasets
- Reduced cloud cost and a positive ROI on the local workstation
- Increased productivity when processing complex data for model building, while the model can still be tested remotely on real production data
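The ROI point can be made concrete with simple break-even arithmetic. The sketch below is illustrative only: the function name and every figure in the usage line are hypothetical placeholders, not measured costs.

```python
def break_even_months(workstation_cost, monthly_cloud_cost, monthly_local_running_cost=0.0):
    """Months until a one-off workstation purchase pays for itself.

    Returns None when running locally saves nothing per month, because the
    purchase would then never break even.
    """
    monthly_saving = monthly_cloud_cost - monthly_local_running_cost
    if monthly_saving <= 0:
        return None
    return workstation_cost / monthly_saving

# Hypothetical figures: 12000 up front, 1500/month of cloud spend avoided,
# 500/month for power and maintenance of the local machine.
months = break_even_months(12000, 1500, 500)
print("break-even after", months, "months")
```

The ROI turns positive once the workstation has been in use for longer than the break-even period.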

The Data Science Stack consists of drivers, CUDA-X, and GPU-accelerated SDKs and frameworks. NGC (NVIDIA GPU Cloud) simplifies and accelerates end-to-end workflows and provides:

- Containers for high-performance computing, deep learning, and machine learning
- Pre-trained models for NLP, vision, DLRM, and many others
- Industry application frameworks such as Clara, Jarvis, and Isaac
- Helm charts for Triton Inference Server and the GPU Operator on a Kubernetes cluster
- Deployment on on-premises, cloud, hybrid-cloud, or edge infrastructure
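As an illustration of how an NGC container might be started on a local workstation, the sketch below only builds the Docker command line and does not run it. It assumes Docker with NVIDIA GPU support is installed (the `--gpus all` flag); the helper name, the `/workspace/project` mount point, and the local path are hypothetical, and `<tag>` is a placeholder to be replaced with a real tag from the NGC catalog.

```python
import shlex

def ngc_docker_command(image, tag, workdir=None):
    """Build (but do not execute) a docker command for an image hosted on nvcr.io."""
    cmd = ["docker", "run", "--gpus", "all", "--rm", "-it"]
    if workdir:
        # Mount the local project directory into the container (assumed path).
        cmd += ["-v", f"{workdir}:/workspace/project"]
    cmd.append(f"nvcr.io/{image}:{tag}")
    return cmd

# "<tag>" is deliberately left as a placeholder.
cmd = ngc_docker_command("nvidia/pytorch", "<tag>", workdir="/home/user/project")
print(shlex.join(cmd))
```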