Benchmarking a model on the NVIDIA Triton Inference Server

In today's machine learning and artificial intelligence landscape, taking models from experimentation to production depends on model deployment and inference, the key components in turning cutting-edge models into real-world applications.

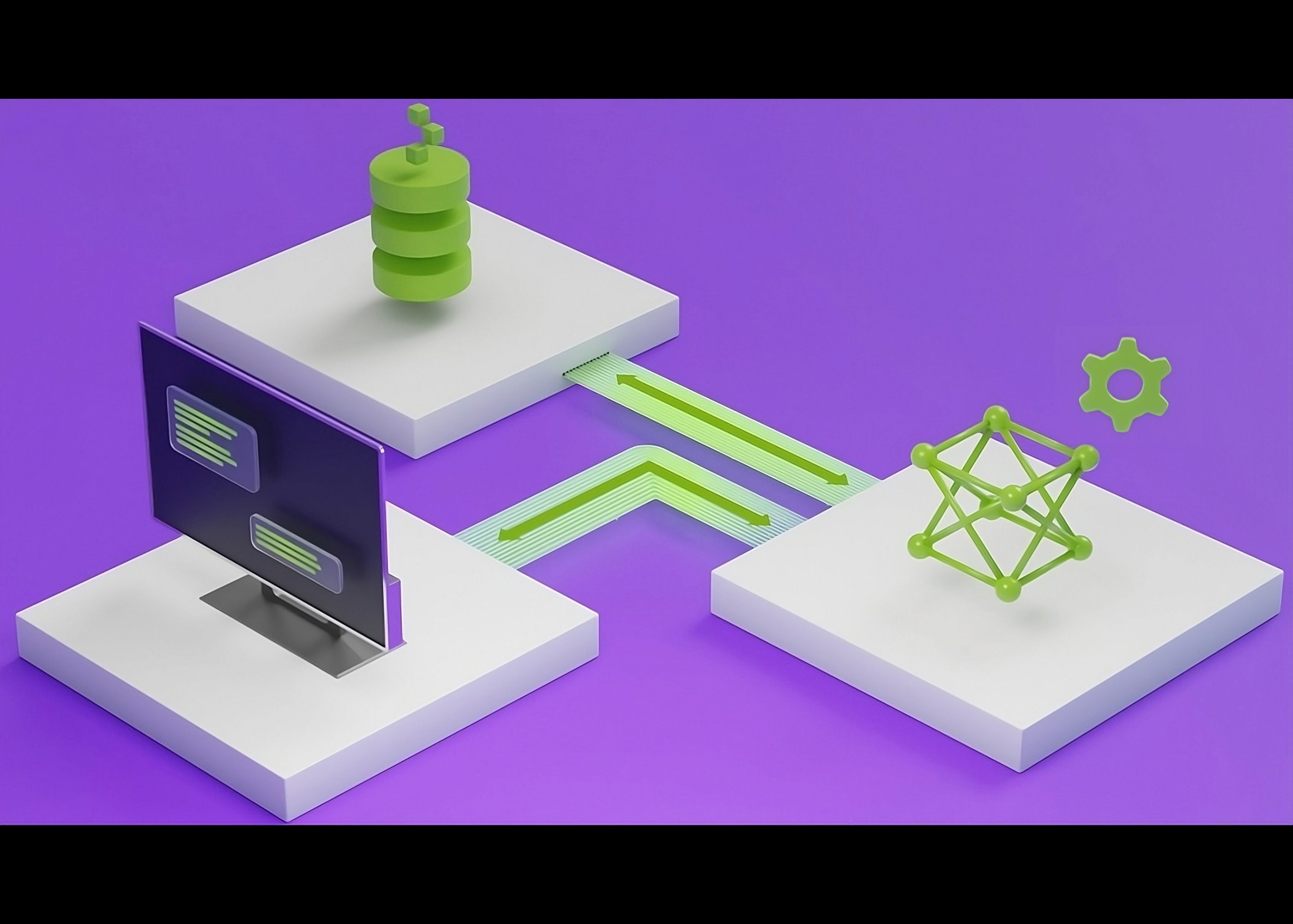

The Triton Inference Server supports a wide range of frameworks, including TensorFlow, PyTorch, ONNX, and more, enabling high-performance, multi-framework model serving. Benchmarking inference workloads on Triton is essential to understanding how the key factors behave under different use cases, and it helps optimize performance for a specific use case.

The key factors in benchmarking include performance, flexibility, low latency, concurrency, maintainability, reusability, throughput, scale, deployment, and many others.

The Triton Inference Server helps maintain these key factors for models running in production.

It supports the following key features:
- Model management (scaling models in and out across a server cluster) and versioning
- Dynamic batching (grouping client requests on the server before they are sent to the model for inference)
- Model orchestration on GPUs
- Metrics and monitoring
- Deploying multiple models on the same GPU or GPU cluster
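To make the dynamic batching idea more concrete, here is a minimal sketch in plain Python. It is not Triton's scheduler; it only illustrates how requests that arrive close together can be grouped into one batch. The function `form_batches` and its parameters are illustrative names, loosely modeled on the batch size limit and queue delay that a dynamic batcher typically exposes, and the arrival times are made up.

```python
from typing import List


def form_batches(arrival_times: List[float],
                 max_batch_size: int,
                 max_queue_delay: float) -> List[List[int]]:
    """Group request indices into batches (illustrative only).

    A batch is closed when it is full, or when the next request arrives
    more than max_queue_delay after the first request in the batch.
    Assumes arrival_times is sorted in ascending order.
    """
    batches: List[List[int]] = []
    current: List[int] = []
    for idx, t in enumerate(arrival_times):
        if current:
            window_start = arrival_times[current[0]]
            batch_full = len(current) >= max_batch_size
            waited_too_long = t - window_start > max_queue_delay
            if batch_full or waited_too_long:
                batches.append(current)
                current = []
        current.append(idx)
    if current:
        batches.append(current)
    return batches


# Six hypothetical requests and their arrival times in milliseconds.
arrivals = [0.0, 0.2, 0.4, 3.0, 3.1, 9.0]
batches = form_batches(arrivals, max_batch_size=2, max_queue_delay=1.0)
print(batches)  # [[0, 1], [2], [3, 4], [5]]
```

A larger delay or batch size produces fewer, bigger batches, which tends to raise throughput at the cost of per-request latency. That trade-off is exactly what a benchmark with and without dynamic batching is meant to expose.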

The experimental setup of a model on Triton

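The setup consists of a high-end GPU server running Triton and a CPU machine acting as the client that sends requests. The client sends its queries over HTTP. Pre- and post-processing times can optionally be included in or excluded from the measurements, models can be optimized with TensorRT, and plots and graphs are used to present the latency benchmarks.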
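The choice of including or excluding pre- and post-processing time can be sketched as a small timing harness. This is an assumption-laden illustration, not the setup's actual client: `time_requests`, `fake_infer`, and `slow_preprocess` are hypothetical names, and the stubs simply sleep instead of calling a server.

```python
import time
from typing import Any, Callable, List


def time_requests(infer: Callable[[Any], Any],
                  raw_inputs: List[Any],
                  preprocess: Callable[[Any], Any] = lambda x: x,
                  postprocess: Callable[[Any], Any] = lambda y: y,
                  include_processing: bool = True) -> List[float]:
    """Return per-request latency in seconds.

    When include_processing is False, only the infer() call is timed;
    pre- and post-processing still run but fall outside the timed region.
    """
    latencies: List[float] = []
    for raw in raw_inputs:
        if include_processing:
            start = time.perf_counter()
            postprocess(infer(preprocess(raw)))
            latencies.append(time.perf_counter() - start)
        else:
            prepared = preprocess(raw)
            start = time.perf_counter()
            output = infer(prepared)
            latencies.append(time.perf_counter() - start)
            postprocess(output)
    return latencies


def fake_infer(x: Any) -> Any:
    """Hypothetical stand-in for an HTTP request to the server (sleeps 2 ms)."""
    time.sleep(0.002)
    return x


def slow_preprocess(x: Any) -> Any:
    """Hypothetical stand-in for tokenization or image resizing (sleeps 2 ms)."""
    time.sleep(0.002)
    return x


inputs = list(range(5))
with_processing = time_requests(fake_infer, inputs, preprocess=slow_preprocess)
without_processing = time_requests(fake_infer, inputs,
                                   preprocess=slow_preprocess,
                                   include_processing=False)
print(f"mean with pre/post:    {1000 * sum(with_processing) / len(inputs):.2f} ms")
print(f"mean without pre/post: {1000 * sum(without_processing) / len(inputs):.2f} ms")
```

In a real run, `infer` would wrap the HTTP call to the server, for example through Triton's Python client package `tritonclient.http`. Reporting both numbers makes it clear how much of the end-to-end latency is spent outside the model.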

Benchmarking models of different sizes involves several factors:
- Latency and throughput vs. concurrency (with and without dynamic batching)
- Performance vs. sequence length on the TensorFlow and PyTorch frameworks
- Performance vs. batch size (requests with various dynamic batch sizes)
- Performance vs. model type and size (base, large, and huge models; transformer and non-transformer models)
- Serving multiple models of the same type (transformer or non-transformer)
- Upgraded GPU hardware versions
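The first factor in the list, latency and throughput vs. concurrency, can be sketched as a simple sweep. The code below is a minimal illustration under stated assumptions: `sweep_concurrency` and `fake_request` are hypothetical names, and the stub sleeps for 5 ms instead of sending a real request.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def timed_call(send_request: Callable[[], None]) -> float:
    """Run one request and return its latency in seconds."""
    start = time.perf_counter()
    send_request()
    return time.perf_counter() - start


def sweep_concurrency(send_request: Callable[[], None],
                      levels: List[int],
                      requests_per_level: int) -> List[Dict[str, float]]:
    """Measure mean latency and throughput at each concurrency level."""
    results: List[Dict[str, float]] = []
    for concurrency in levels:
        wall_start = time.perf_counter()
        # Each worker thread keeps one request in flight at a time.
        with ThreadPoolExecutor(max_workers=concurrency) as pool:
            latencies = list(pool.map(lambda _: timed_call(send_request),
                                      range(requests_per_level)))
        wall = time.perf_counter() - wall_start
        results.append({
            "concurrency": concurrency,
            "mean_latency_ms": 1000 * sum(latencies) / len(latencies),
            "throughput_rps": requests_per_level / wall,
        })
    return results


def fake_request() -> None:
    """Hypothetical stand-in for one inference request (sleeps 5 ms)."""
    time.sleep(0.005)


rows = sweep_concurrency(fake_request, levels=[1, 2, 4], requests_per_level=16)
for row in rows:
    print(f"concurrency={row['concurrency']:>2}  "
          f"latency={row['mean_latency_ms']:.2f} ms  "
          f"throughput={row['throughput_rps']:.0f} req/s")
```

With a sleeping stub, latency stays flat while throughput grows with concurrency. Against a real server, throughput usually levels off once the GPU is saturated and latency starts to climb, and the sweep shows where that happens. The same loop can be repeated over sequence lengths or batch sizes to cover the other factors.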

Conclusion

Benchmarking the NVIDIA Triton Inference Server is an essential step in ensuring that models run optimally in production environments. By measuring key performance metrics such as latency, throughput, and resource utilization, one can identify bottlenecks and optimize server performance.
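As a rough illustration of turning raw measurements into such metrics, the sketch below summarizes a list of latencies into percentiles and throughput. The helper names are hypothetical, the percentile uses the simple nearest-rank method, and the sample values are made up for the example rather than taken from any benchmark.

```python
import math
from typing import Dict, List


def percentile(sorted_values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a list sorted in ascending order."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def summarize(latencies_ms: List[float], wall_seconds: float) -> Dict[str, float]:
    """Summarize per-request latencies and overall throughput."""
    ordered = sorted(latencies_ms)
    return {
        "p50_ms": percentile(ordered, 50),
        "p95_ms": percentile(ordered, 95),
        "p99_ms": percentile(ordered, 99),
        "throughput_rps": len(ordered) / wall_seconds,
    }


# Made-up latencies in milliseconds, including two slow outliers.
samples = [12.0, 11.5, 13.2, 40.0, 12.4, 11.9, 12.8, 12.1, 95.0, 12.3]
summary = summarize(samples, wall_seconds=0.5)
print(summary)
```

Tail percentiles such as p95 and p99 expose the slow outliers that a mean would hide, which is often where bottlenecks first show up.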

Triton's ability to handle multiple models, support different frameworks, and scale across hardware makes it a strong platform for deploying AI applications at scale. Whether working with a single model or orchestrating a complex multi-model pipeline, benchmarking with Triton provides real-world performance metrics that help ensure the model meets the demands of the application.