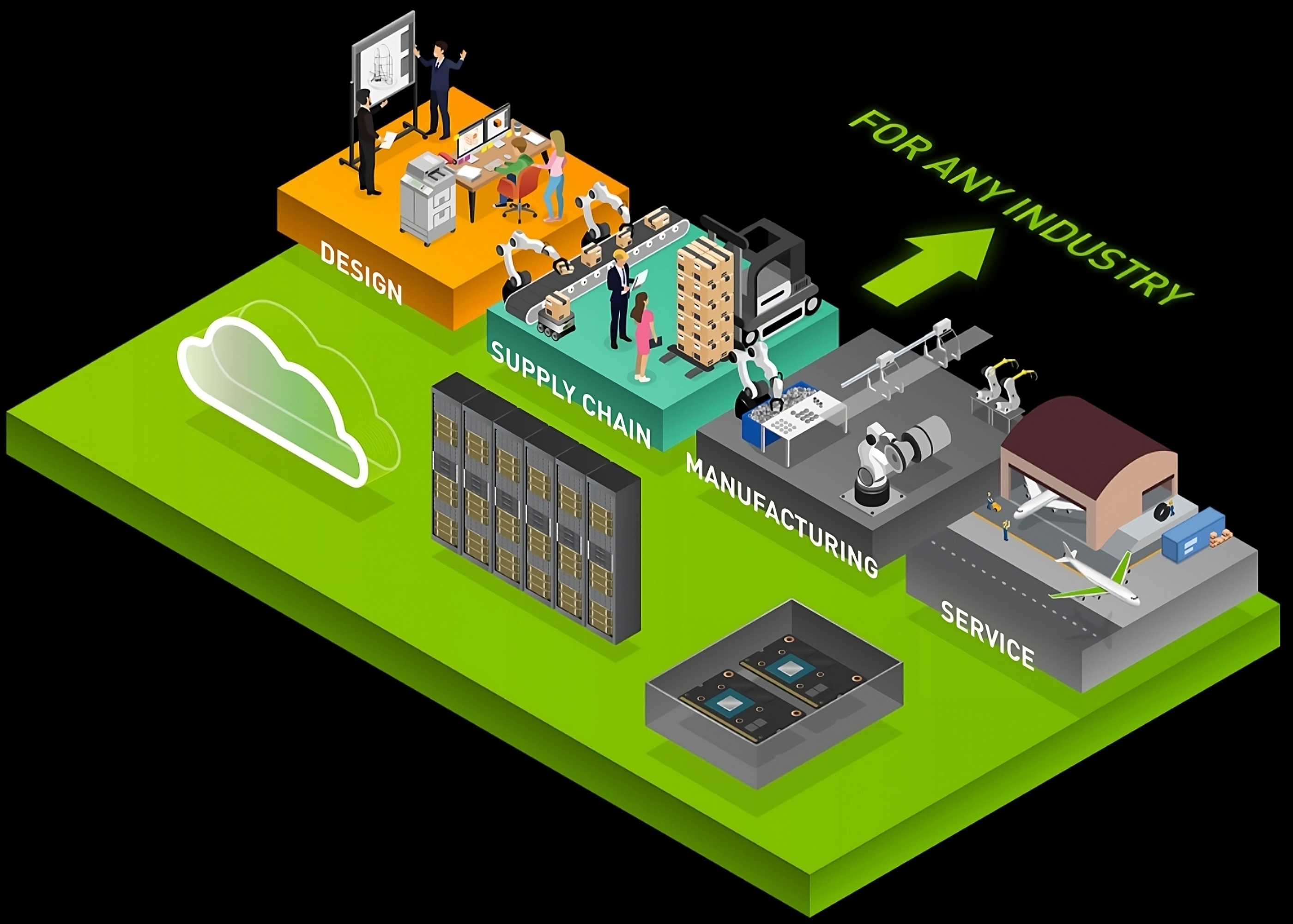

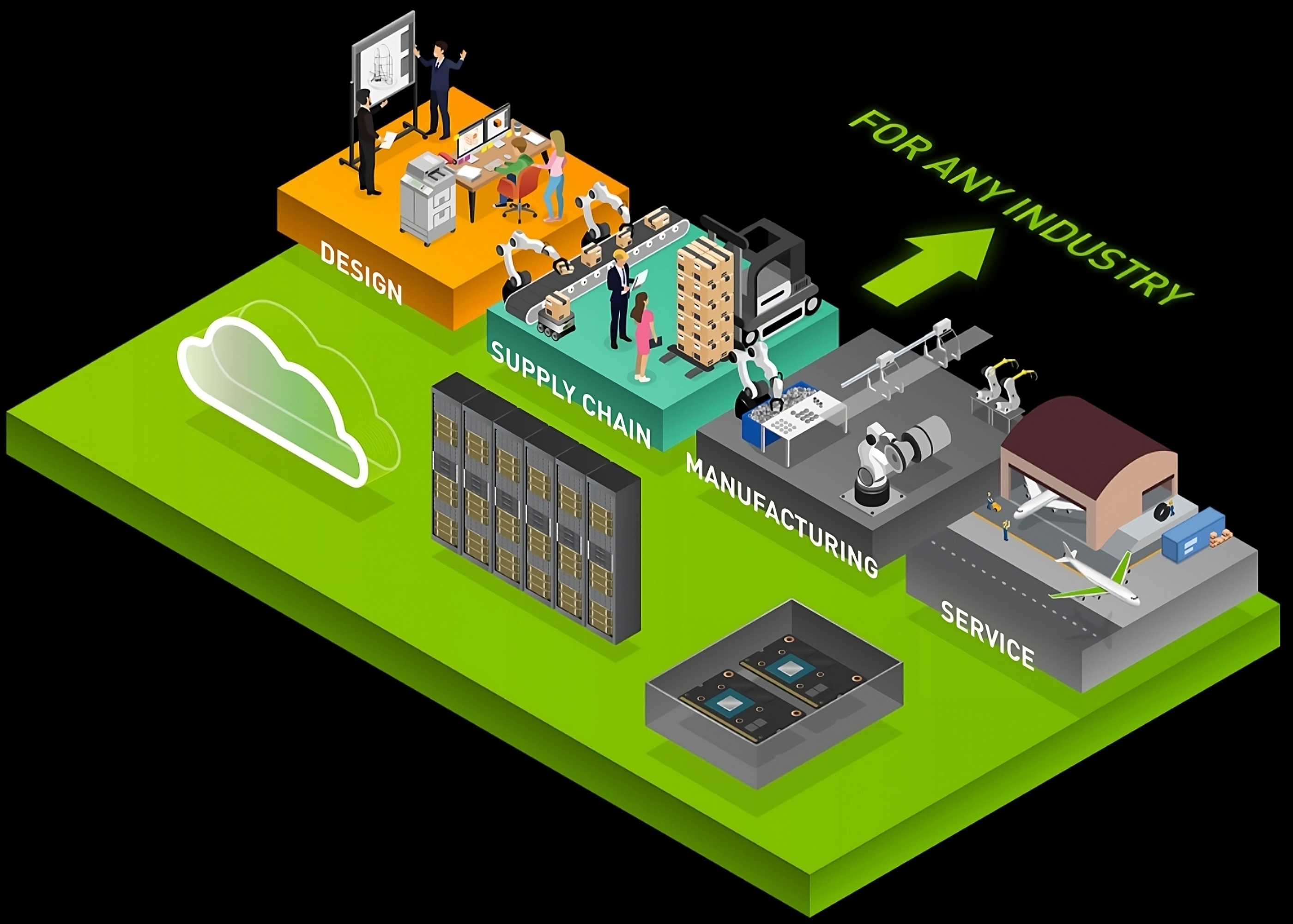

Building future AI factories

Designing an NVIDIA AI Platform

NVIDIA provides a leading full-stack platform for AI. The GPU alone does not make an AI platform; it also requires a suite of computing infrastructure, data processing units (DPUs), algorithms, AI frameworks, AI application frameworks and networking capabilities. The AI platform improves compute power, scalability, data management, security, optimization, efficiency, latency, automation, MLOps and more. The platform should be able to host multiple domains, with a suitable OS suite for each domain, such as NVIDIA HPC, NVIDIA AI Enterprise and NVIDIA Omniverse. The AI stack also brings the NVIDIA application frameworks: Holoscan, Metropolis, Isaac, Drive, Avatar, NeMo and many more.

What are AI factories?

This high-performance AI computing platform helps in building AI factories. Typically, in a factory, raw material is fed in on one side and a finished product comes out the other. In an AI factory, data and the AI computing platform are the input, and intelligent tokens come out as the output. Data centres form the cost structure of the AI factory business. The AI computing platform is purpose-built and highly optimized: it processes data, refines the processed data into models, trains those models, and produces monetizable tokens at great scale and efficiency.

This is achieved by redefining the entire computing stack, from CPU to GPU, networking, coding algorithms, and ML, through to building GenAI apps.

The digital intelligence tokens produced by AI factories are then transformed into some other kind of intelligent response or action by a digital system.

AI factories compounding benefits

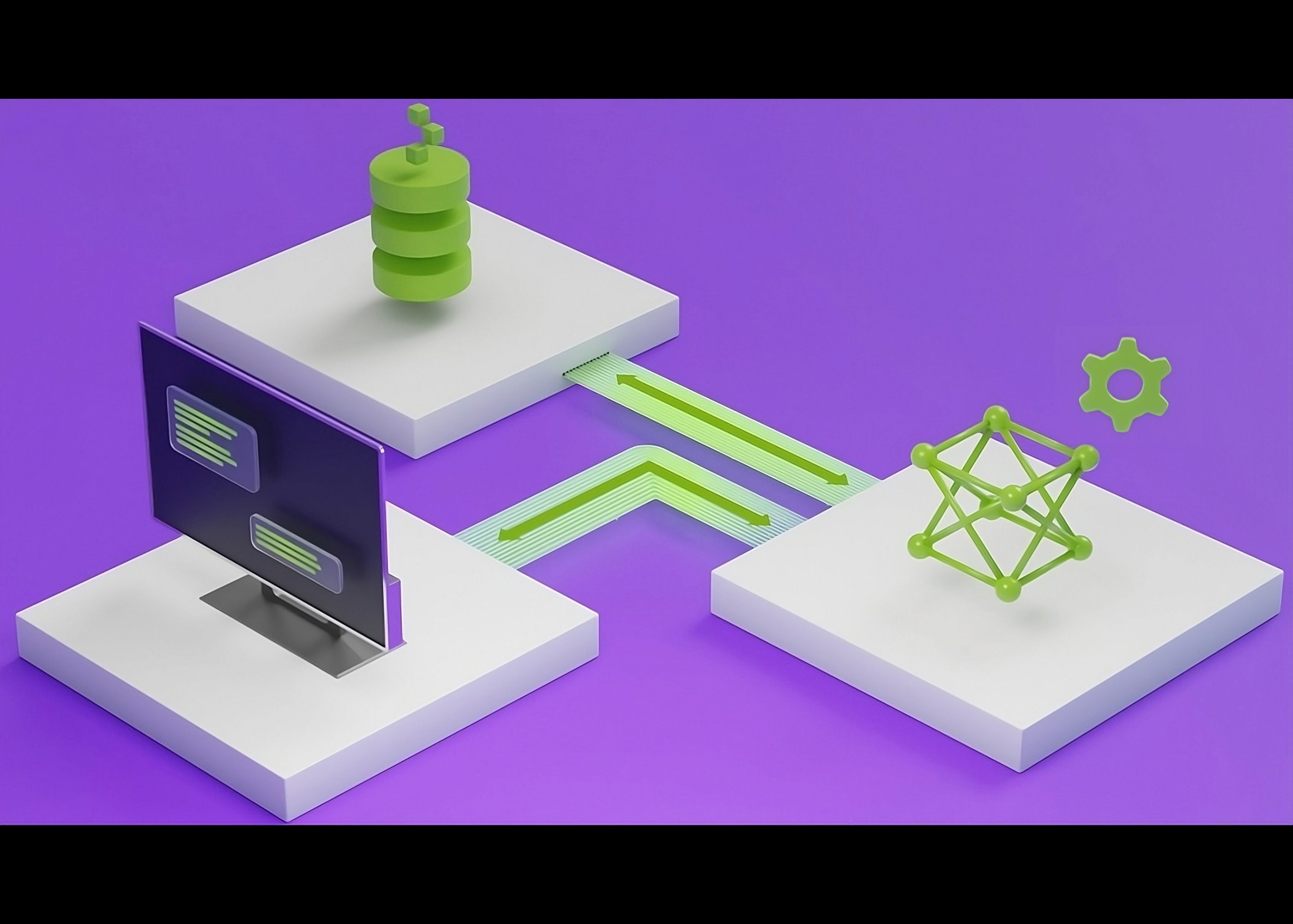

AI factories deliver compounding benefits when they are built on the right underlying platform architecture, which comprises:

1. The right combination of GPUs, CPUs, and NVLink, selected by the enterprise as its computing hardware
2. Network components such as InfiniBand and Ethernet
3. Inference servers, tools, and frameworks, deployed as NVIDIA inference microservices (NIMs)
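
To make the inference layer more concrete, here is a minimal Python sketch of how an application might prepare a request to a deployed inference microservice. It assumes the microservice exposes an OpenAI-style chat completions endpoint over HTTP; the URL, port, and model name below are hypothetical placeholders, not values from this article, so check them against your own deployment.

```python
import json
import urllib.request

# Hypothetical values for illustration only: replace with your deployment's
# actual endpoint and model name.
BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "example-model"


def build_chat_request(prompt: str, max_tokens: int = 64) -> urllib.request.Request:
    """Build (but do not send) a chat-completions style HTTP request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,  # upper bound on generated tokens
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON reply (needs a running server)."""
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Only builds and prints the request, so it runs without a server.
    req = build_chat_request("What is an AI factory?")
    print(req.full_url)
    print(req.data.decode("utf-8"))
```

In the factory analogy, the prompt is the raw material going in and the generated tokens in the reply are the finished product. Calling `send(req)` would perform the actual round trip once a server is available at the assumed address.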